At the heart of these systems are orchestration and scheduling tools. Although the terms are often used interchangeably, orchestration and scheduling are distinct parts of a robust container infrastructure.

What are Orchestrators and Schedulers?

Orchestration refers to the coordinating and sequencing of different activities. Orchestration tools coordinate clustered pools of resources. They host containers, allocate resources to them in a consistent manner, and allow the containers to work together in a predictable environment.

Scheduling refers to the assignment of workloads to the places where they can run most efficiently. Schedulers locate nodes that are capable of executing a container and then provision the instance there.
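To make the scheduling step concrete, here is a minimal Python sketch of how a scheduler might locate a capable node and reserve resources on it. The `Node` and `ContainerRequest` types, the `schedule` function, and the "tightest fit" scoring rule are all illustrative assumptions, not the API or algorithm of any particular scheduler.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    # Hypothetical view of a cluster node: what is still unallocated.
    name: str
    free_cpu: float    # CPU cores
    free_mem_mb: int   # memory in MiB


@dataclass
class ContainerRequest:
    # Hypothetical resource request for one container.
    name: str
    cpu: float
    mem_mb: int


def schedule(request: ContainerRequest, nodes: List[Node]) -> Optional[Node]:
    """Pick a node for the request and reserve its resources, or return None."""
    # Filter: keep only nodes capable of running the container.
    feasible = [
        n for n in nodes
        if n.free_cpu >= request.cpu and n.free_mem_mb >= request.mem_mb
    ]
    if not feasible:
        return None

    # Score: prefer the tightest fit (least CPU left over, then least memory).
    # This is one simple heuristic among many; real schedulers weigh more factors.
    best = min(
        feasible,
        key=lambda n: (n.free_cpu - request.cpu, n.free_mem_mb - request.mem_mb),
    )

    # Reserve: record that the resources are now taken on the chosen node.
    best.free_cpu -= request.cpu
    best.free_mem_mb -= request.mem_mb
    return best
```

A caller would build a list of `Node` objects from whatever inventory the cluster exposes and call `schedule` once per container. A return value of `None` means no node currently has room.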

Container Orchestration and Scheduling

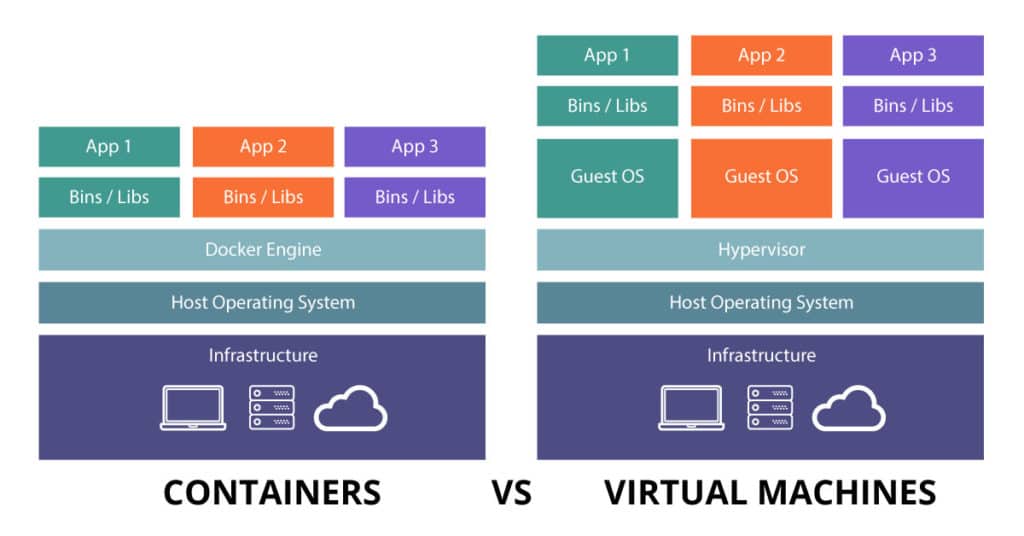

The benefits of containers are not limited solely to development. Containers can provide many of the benefits of virtualization, and they are being adopted as a powerful way of managing operations. Instead of maintaining complex infrastructure running on a host of different machines and architectures, quality and operations teams are increasingly able to consolidate workloads into “container-based clouds.”

One goal of such clouds is to abstract resources at the infrastructure level through clustering. Containers can access CPU, memory, storage, and other assets, and they can reserve specific resources, execute workloads, and monitor the health of deployments through dedicated APIs.

This removes some of the architectural challenges in designing complex applications, such as those traditionally used in Big Data, analytics, e-commerce, and the Internet of Things (IoT). Rather than worrying about deploying and maintaining sophisticated cluster software, architects and operations managers can focus on the architecture of the application.

Container as an Application and Management Unit

Container-based clouds achieve their goals by building management APIs around containers rather than machines (physical or virtual). Well-designed containers are “hermetic” and attempt to encapsulate almost all of an application’s dependencies. When this is done well, the only external interface the container usually depends on is the Linux kernel system-call interface. This emphasis makes the container the fundamental unit of management, and the mechanisms for resource management (compute, memory, and storage), scaling, monitoring, and logging are built around it.

By shifting the infrastructure focus from the machine to the application, it is possible to abstract away the data center (an important general goal of cloud computing):

• Teams can upgrade hardware and operating systems with minimal impact on applications.

• Application teams are freed from worrying about the specific details of machines and operating systems.

• Telemetry can be collected and analyzed in a centralized fashion.

• It’s possible to provide a convenient point to register generic APIs that enable the flow of information between the cloud’s management components and the application.

• The cloud can communicate information into the container such as resource limits, container metadata and configuration, and notices (which might provide termination warnings before migration or node maintenance).

• The identity of a container instance can be matched, across the cluster, with the identity of whoever deployed it.
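The point above about the cloud communicating resource limits and termination notices into the container can be sketched from the application's side. The example below assumes a Linux host using cgroup v2, where the memory limit is exposed at `/sys/fs/cgroup/memory.max`, and a runtime that sends `SIGTERM` before stopping a container. The function names and the `shutdown_requested` flag are illustrative, and other platforms may deliver this information differently.

```python
import signal
import threading
from pathlib import Path
from typing import Optional

# Assumption: cgroup v2 layout. cgroup v1 hosts expose a different path.
CGROUP_MEMORY_MAX = Path("/sys/fs/cgroup/memory.max")

# Set once the platform has warned us that the container is about to stop.
shutdown_requested = threading.Event()


def parse_memory_limit(raw: str) -> Optional[int]:
    """Return the limit in bytes, or None when the file reports "max" (no limit)."""
    value = raw.strip()
    return None if value == "max" else int(value)


def read_memory_limit(path: Path = CGROUP_MEMORY_MAX) -> Optional[int]:
    """Read the memory limit; return None if it is unset or cannot be read."""
    try:
        return parse_memory_limit(path.read_text())
    except (OSError, ValueError):
        return None


def on_terminate(signum, frame) -> None:
    """Treat SIGTERM as a termination warning and start a graceful shutdown."""
    shutdown_requested.set()


def install_termination_handler() -> None:
    # Must be called from the main thread, as required by signal.signal.
    signal.signal(signal.SIGTERM, on_terminate)
```

An application could call `install_termination_handler()` at startup, size its caches from `read_memory_limit()`, and check `shutdown_requested.is_set()` in its main loop so it can finish in-flight work before the container is stopped.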

Start Your Transformation with DVO Consulting!

DVO Consulting is a privately held, female-owned, national IT, business consulting, and staff augmentation firm founded and headquartered in Bountiful, UT. We also have offices in Great Falls, VA, which support clients in Maryland, Virginia, and Washington DC and cover our East Coast operations.

Contact us to learn more about containers and how DVO Consulting can help you take advantage of containerization to deliver your software more rapidly and better engage your customers.